By: Esther Omolola and Melissa Dorvil

This upcoming November marks two years since artificial intelligence (AI) software ChatGPT launched, ushering in a new age of technology long in the making.

The rise of ChatGPT, short for Chat Generative Pre-Trained Transformer, reflects the global fascination amassed over the years as AI reshapes various careers and sectors. Renowned for its remarkable human-like conversation ability, the AI software can answer questions, perform tasks conveniently and help gain insights with its extensive dataset – all at an impressive speed.

Since its release, its integration into academic settings has sparked excitement and concern among educators and students alike, leading to much controversy and debate on whether this technology belongs in the classroom and what this means for the future of learning.

Jack Kenigsberg, director of the Rockowitz Writing Center, makes clear that he is not a computer science expert. Still, he explains, “All AI we have today often relies on a neural network, so AI must be trained to do a task,” in a process known as machine learning.

Because “AI doesn’t have a brain, it can’t create an original thought,” Kenigsberg says, but AI can generate human-like texts in various forms such as emails and text messages or even academic writing assignments.

Users can even customize the outcomes of the tasks to their preferences by altering the size, structure, tone, depth and language. This customizable capability not only personalizes the experience but also allows students to use ChatGPT to meet the specific parameters of an assignment, making cheating that much more enticing.

The extensive datasets behind AI models like ChatGPT allow them to create a vast range of outputs, so the more specific the prompt, the more precise the outcome will be.

“You can’t ask it to create something unrecognizable to it, but if you give it a set of parameters, it will custom create a response,” Kenigsberg warns.

Jeff Allred, an associate professor in the English department and past collaborator with Kenigsberg on faculty learning, recalled giving his students a take-home midterm exam last year, where he says students “discovered ChatGPT in sufficient numbers.”

“When the exam came back, I just felt so crushed and deflated,” he said. “All of these grammatically perfect kinds of B-plus answers to my questions came back and I just knew that they were all written by AI.”

Allred now opts to give students a traditional blue book midterm in that course to determine which students genuinely make the effort to keep up with the weekly readings and retain the material.

Gabriela Barahona, an English major in her junior year, expressed concerns about ChatGPT’s impact on classroom practices, having gotten a taste of the blue book experience.

“For three classes last semester, we no longer had take-home papers,” she observed. “My professor said explicitly it’s because of ChatGPT.”

AI platforms such as ChatGPT blur the lines of plagiarism.

“I didn’t think I could go after the students because it felt like it was two-thirds of them,” Allred stressed. “And then, of course, it’s not exactly plagiarism. You don’t exactly know which students used it, and to what degree, because it’s very hard to detect.”

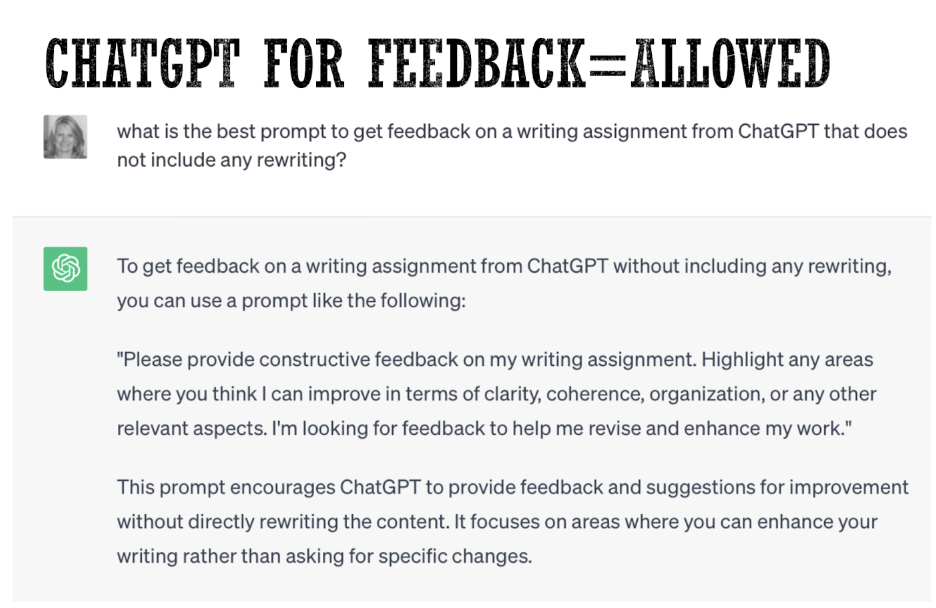

Some professors even use AI detectors like Turnitin, yet “it’s unclear how they actually work,” Kenigsberg comments. Detectors are not one hundred percent reliable: a student can easily evade them by rewriting the AI-generated content in their own words, which is still plagiarism.

Students and professors are left to their own devices as they scramble for definitive answers. Is simply consulting ChatGPT cheating? Do students have to disclose if they utilized AI or cite it in bibliographies? There’s still so much ground to cover on how AI tools like ChatGPT should ethically exist in a classroom.

“I wouldn’t say that’s cheating necessarily because, at the end of the day, it’s a shortcut to research rather than reading a bunch of articles,” Barahona said. “But, if you completely get an essay done by ChatGPT, then obviously it’s cheating.”

The ethical use of AI emerges as a central topic in discussions about its integration into academia. In response, several academic institutions have implemented bans on ChatGPT or any AI tools as a knee-jerk reaction to protect academic honesty.

When approached for input on this article, many students declined and expressed hesitancy to even use ChatGPT out of fear of disciplinary measures.

Danika Murphy, a Media Studies student with a journalism concentration, adds another layer to this concern, emphasizing the broader implications of AI integration. She voices her worries about the potential damage of excessive reliance on AI, particularly in journalism.

“We’re relying on technology to simply copy and paste information without verifying it ourselves,” she says. “If we allow technology to be responsible for our thinking, we will become ignorant of certain matters. This is particularly problematic for the future, especially for those working in media or journalism, such as myself.”

Kenigsberg doesn’t believe ChatGPT will hinder students’ critical thinking in the long run. Allred shares this optimistic attitude.

“I do feel the need to resist the knee-jerk reaction, but also the uncritical embrace of it,” Allred says. “It’s our job as humanists to be critical and think through the problems of things in a nuanced way.”

As technology is designed to progressively improve our lives, AI can offer educators an opportunity to rethink traditional teaching practices and adapt to the new ways students think and engage with the world. At Hunter, students and faculty stress the importance of using AI responsibly, as plagiarizing content generated by AI violates academic integrity policies.

According to the CUNY website, instructors have the flexibility to monitor and decide whether to incorporate AI technologies while upholding the integrity of higher education. Hunter’s Office of Student Conduct periodically sends notices to students, reminding them of the updated policy. An email blast from Mar. 19, 2022, clearly defines that “unauthorized use of artificial intelligence generated content” is strictly prohibited.

AI tool utilization is only allowed “with explicit and clear permission of each individual instructor for a given course,” the email read.

When it comes to faculty attitudes on integrating AI into the classroom, “it’s the most interesting conversation happening right now amongst faculty,” Kenigsberg remarks. Just last fall, Kenigsberg attended a CUNY-wide conference as a panelist, where participants discussed the ethical usage of AI as it grows increasingly popular across educational institutions.

Hunter’s faculty development center, ACERT (Academic Center for Excellence in Research and Teaching), also holds weekly lunchtime seminars, sometimes covering AI-related topics, including a recent event open to all students and faculty on generative AI tools such as Bard.

Despite their convenience, AI tools like ChatGPT also have limitations. Allred cites poetry and comedic writing as examples of what challenges ChatGPT. Both forms rely on cultural context and nuanced language to be pulled off effectively. The former entails a creative use of metaphor, rhythm and wordplay, while the latter involves timing, irony and an understanding of human behavior. AI struggles to replicate the subtle aspects of each. Additionally, it has a habit of generating inaccurate responses to search queries.

Professor Sissel McCarthy, head of the journalism department at Hunter, cautions that “ChatGPT is not that great at literacy.”

“Number one, it makes a lot of mistakes,” McCarthy says. “You have to fact-check everything that AI says because it’s very prone to what we call hallucinations, where it makes up stuff.”

Fortunately, there’s potential to leverage these constraints to the professors’ advantage. Actively engaging with AI allows us to stay ahead of its developments and demystify the obscurities surrounding it. Allred spotlights one of Kenigsberg’s insights: create questions that fall outside the two modes ChatGPT does extremely well, which are summarization and comparison and contrast.

ChatGPT is also a great starting point for ideas if you need a sense of direction. As an educator and interdisciplinary artist, Professor Andrew Demirjian suggests, “For a creative writing class, AI like ChatGPT can help you envision different speculative scenarios, like the look of a futuristic apartment or rituals for a fictive cult, then you would write a story that can flesh out these ideas and make them concrete with your original prose.”

In many cases, it can also illustrate what NOT to do in writing due to the superficial nature of the content it generates. AI can’t draw inspiration from personal experience and unique perspectives as humans do.

The integration of AI into professional and educational settings has led the journalism department at Hunter to adapt teaching methods to responsibly incorporate AI in select courses while ensuring that students continue to develop critical thinking and writing skills.

One notable approach McCarthy uses in the “News Literacy in a Digital Age” and “Classroom to Career” courses is utilizing AI to supplement education rather than replace essential critical thinking and productivity skills. She also suggests redesigning assignments to focus on tasks that AI cannot perform, such as synthesizing personal experiences and course material.

The growing popularity of AI in the workplace has led to discussions about its potential to replace humans in various job industries. Barahona, who is considering a career in public policy, poses, “Why hire someone to read and write documents when you can get it done by AI for free in less time?”

Murphy is also worried about job displacement, noting that AI’s increasing presence in media could lead to significant layoffs, especially in environments where attention-grabbing headlines often trump accuracy, compromising the integrity of journalism.

“Journalism is based on truth,” she says. “Regardless of whatever comes next in terms of technology and artificial intelligence, this should always be remembered. People are owed the truth, and we as journalists have to provide the truth.”

McCarthy echoes this sentiment, citing examples of AI-generated stories riddled with errors that resulted in embarrassment and loss of credibility for news outlets like CNET and BuzzFeed. Despite the efficiency AI offers in analyzing data and generating story ideas, both Murphy and McCarthy emphasize that it cannot replace human journalists’ storytelling abilities or the human connection that journalism depends on.

While AI may enhance efficiency in certain tasks, journalists must maintain control over content creation and fact-checking processes to uphold standards and accuracy. As AI continues to evolve, it poses both challenges and opportunities for the journalism industry, prompting a reevaluation of how journalistic integrity is protected and the role of journalists in an increasingly automated field.

Such an approach potentially mitigates the fear of AI replacing human labor, pushing educators and students to think outside the box by focusing on areas where authentic human insight cannot be replicated.

The conversation on AI continues, but it all hinges on whether we are collectively ready to embrace AI. Students should ask each of their professors about their guidelines on AI and ChatGPT, so they know what to expect within that individual course. To many academic institutions across the country, AI is still relatively alien, subject to natural fears, curiosity and speculation.

However, as further discourse increases familiarity, maybe one day AI will be fully welcomed as a friend, not a foe, to the classroom.
